Blog for July 2021 Seminar: Ethical Artificial Intelligence

This seminar dealt with the complex issue of ethical artificial intelligence and ontologies. The speaker was Ahren E. Lehnert, a Senior Manager with Synaptica LLC (http://www.synaptica.com), which has provided ontology, taxonomy and text analytics products for 25 years.

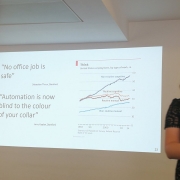

The central focus of Ahren’s talk was the relationship between ethics, artificial intelligence and ontologies. In practice, Artificial Intelligence (AI) means machine learning applied to content tagging, recommendation engines and terror and crime prevention. It is used in many industries and fields, including finance and insurance, job applicant selection, the development of autonomous vehicles and artistic creativity. However, we must be careful, because there are some outstanding examples of ‘bots behaving badly’. For example, Microsoft’s chatbot, Tay, learned language from its interactions with Twitter users. Unfortunately, Twitter ‘trolls’ taught Tay anti-semitic, racist and misogynistic language, and Tay was shut down very quickly.

Here we are in the territory of ‘ghosts in the machine’. Is that photo really an image of (say) Arnold Schwarzenegger (actor and politician), or is it somebody posing as him, or somebody who just happens to look very much like him? More difficult still is an image of somebody you know to be dead, (say) Peter Cushing (actor), whose likeness may have been edited into an image to suit a particular project or viewpoint. Are we OK with these things or not? It does matter.

Information professionals frequently encounter machine learning (https://en.wikipedia.org/wiki/Machine_learning).

Now, however much we may want to go “all in” on machine learning, most companies have not yet worked out how to “de-silo and clean their data”. Critically, there are five steps to predictive modelling: 1) get data; 2) clean, prepare and manipulate the data; 3) train the model; 4) test the model; 5) improve it. We must be realistic about the results: we will not build a ‘saviour machine’ (!). Machine learning basics include: 1) the need for big data; 2) the need to look for patterns; 3) the need to learn from experience; 4) the need for good examples; 5) the need to take time.

We can find good and bad examples of machine learning, and science fiction on television and film offers useful parallels. For example, ‘Star Trek’ tells stories of humans and aliens serving in Starfleet who hold altruistic values and try to apply these ideals in difficult situations. ‘Star Wars’, by contrast, depicts a galaxy in which humans and aliens co-exist with robots. This galaxy is bound together by a mystical power known as ‘The Force’, which is wielded by two major knightly orders: the Jedi (peacekeepers) and the Sith (aggressors). Conflict is endemic.

In practice, machine learning fails (the bad examples) because of insufficient, inaccurate or inconsistent data; models finding meaningless patterns; data scientists not spending enough time improving their models; models that are a ‘black box’ which users ‘don’t really understand’; and the inherent difficulty of unstructured text.
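The five predictive-modelling steps described above can be sketched in a few lines of Python. This is a minimal toy under stated assumptions, not the speaker’s method: the synthetic data, the helper names (`get_data`, `clean`, `train`, `accuracy`, `improve`, `run_pipeline`) and the one-feature threshold classifier are all hypothetical choices made for illustration. The ‘improve’ step tunes on a separate validation split so that the held-out test data stays untouched.

```python
import random
from statistics import mean


def get_data(n=200, seed=0):
    """Step 1: get data (a hypothetical synthetic stand-in for a real source).

    Each record is (feature, label); None marks a missing value.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # Assumption of this sketch: class 1 centred at 2.0, class 0 at 0.0.
        x = rng.gauss(2.0 if label else 0.0, 1.0)
        if rng.random() < 0.05:
            x = None  # simulate an incomplete record
        rows.append((x, label))
    return rows


def clean(rows):
    """Step 2: clean and prepare by dropping records with a missing feature."""
    return [(x, y) for x, y in rows if x is not None]


def train(rows):
    """Step 3: train a one-feature threshold classifier.

    The threshold is the midpoint of the two class means; it assumes
    class 1 has the larger mean, as in the synthetic data above.
    """
    m0 = mean(x for x, y in rows if y == 0)
    m1 = mean(x for x, y in rows if y == 1)
    return (m0 + m1) / 2


def accuracy(threshold, rows):
    """Step 4: test by measuring the fraction of rows classified correctly."""
    return sum((x > threshold) == (y == 1) for x, y in rows) / len(rows)


def improve(threshold, rows, step=0.1, span=10):
    """Step 5: improve by trying nearby thresholds on validation data.

    The original threshold (k = 0) is always a candidate, so validation
    accuracy can never get worse.
    """
    candidates = [threshold + step * k for k in range(-span, span + 1)]
    return max(candidates, key=lambda t: accuracy(t, rows))


def run_pipeline(seed=0):
    rows = clean(get_data(seed=seed))
    n = len(rows)
    # 60/20/20 split: training, validation (for tuning), test (held out).
    train_rows = rows[: int(0.6 * n)]
    val_rows = rows[int(0.6 * n): int(0.8 * n)]
    test_rows = rows[int(0.8 * n):]
    threshold = train(train_rows)
    tuned = improve(threshold, val_rows)
    return {
        "n_clean": n,
        "threshold": threshold,
        "tuned": tuned,
        "val_before": accuracy(threshold, val_rows),
        "val_after": accuracy(tuned, val_rows),
        "test_before": accuracy(threshold, test_rows),
        "test_after": accuracy(tuned, test_rows),
    }


if __name__ == "__main__":
    print(run_pipeline())
```

Even in this toy, most of the code is about getting, cleaning and splitting data rather than the model itself, which echoes the talk’s point that data preparation is where companies struggle.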

Where do the biases that make their way into machine learning come from? People generate content, and people have biases to do with language, ideas, coverage, currency and relevance. Taxonomies are constructed to reflect an organizational viewpoint. They are built from content that can be flawed, and their coverage can be topically skewed. They may be built by a single taxonomist or by a team, and the subject-matter expertise behind them may be lacking. Furthermore, text analytics is ‘inherently difficult’ because of language, techniques and content. The algorithms in machine learning models depend on training data, which must be accurate and current, with good coverage. Here is a quote from Jean Cocteau: “The course of a river is almost always disapproved of by its source”. Is the answer an ontology?
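One practical way to spot topical skews in coverage is to count how much tagged content sits under each taxonomy term before that content is used as training data. The sketch below is an illustration, not a method presented in the talk: the input format (each document as a set of term labels), the function name `coverage_report` and the `min_share` cut-off are hypothetical assumptions.

```python
from collections import Counter


def coverage_report(tagged_docs, taxonomy_terms, min_share=0.05):
    """Report the share of documents tagged with each taxonomy term.

    tagged_docs: iterable of collections of term labels, one per document
    (a hypothetical format chosen for this sketch).
    Returns (shares, under_covered), where under_covered lists, in
    alphabetical order, the terms whose share falls below min_share.
    """
    terms = set(taxonomy_terms)
    counts = Counter()
    total = 0
    for tags in tagged_docs:
        total += 1
        # Count each term at most once per document; ignore tags outside the taxonomy.
        counts.update(set(tags) & terms)
    shares = {t: (counts[t] / total if total else 0.0) for t in taxonomy_terms}
    under_covered = sorted(t for t, s in shares.items() if s < min_share)
    return shares, under_covered


if __name__ == "__main__":
    docs = [{"finance"}, {"finance", "insurance"}, {"finance"}, {"recruitment"}]
    terms = ["finance", "insurance", "recruitment", "autonomous vehicles"]
    shares, gaps = coverage_report(docs, terms, min_share=0.3)
    print(shares)
    print(gaps)  # ['autonomous vehicles', 'insurance', 'recruitment']
```

A report like this does not remove bias by itself, but it makes a skew visible so that a taxonomist or subject-matter expert can decide whether the gap reflects reality or a flaw in the content.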

What is ethical AI? What does it mean? It means being Transparent, Responsible and Accountable.

Transparent – Both ML and AI outcomes are explainable.

Responsible – Avoiding the use of biased algorithms or biased data.

Accountable – Taking action ‘to actively curate data, review and test’.

FAST Track Principles – Fairness, Accountability, Sustainability, Transparency.

Whose ethics do we use: those of Captain Kirk from ‘Star Trek’ or those of HAL, the computer from ‘2001: A Space Odyssey’? We are back with our earlier ‘Star Trek’ / ‘Star Wars’ conundrum. How will these ethics work out in practice? How will we reach consensus? How do we define what is ethical, and in what context? Who will write the codes of conduct? Will it be government? Will it be business? Who will enforce the codes of conduct?

What are the risks of AI in practice? Poor business outcomes; unintended consequences; mistrust of technology; weaponization of AI technology; political and/or social misinformation; deepfakes; Skynet.

Steps towards ethical AI within the organization include: conducting risk assessments; understanding social concerns; understanding data sources and data science; investing in legal resources; meeting industry- and geography-specific regulatory requirements; and tapping into external technical expertise. There will be goals to meet and challenges to overcome. The organization should have an ethical AI manifesto or guidelines, which should identify corporate values, align with regulatory requirements, involve the entire organization, communicate the process and its results, and nominate a champion. However, many existing AI ethics frameworks are vague formulations with no enforcement mechanisms.

So, to get started on an AI programme, we must clearly define the problem: What do you want to do? Why do you want to do it? What do you expect the outputs to be, and what will you do with them? We must seek to ‘knowledge engineer’ the data to provide a controlled perspective and construct a ‘virtuous content cycle’. The aim is a definitive source for ontologies: authoritative, accurate and objective. Pay particular attention to labelling, data quality and training data. Get the data right, and create trust in the consuming systems and their resulting analytics and reporting. Use known metrics. Remember that governance applies to both business and technical processes.

Rob Rosset 26/07/2021